Long short-term memory (LSTM) is an artificial recurrent neural network (RNN) architecture used in the field of deep learning. Unlike standard feedforward neural networks, LSTM has feedback connections. It can process not only single data points (such as images), but also entire sequences of data (such as speech or video). There have been numerous applications of convolutional networks going back to the early 1990s, starting with time-delay neural networks for speech recognition.
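The following minimal NumPy sketch makes the LSTM description concrete. It runs one forward step of a standard LSTM cell over a short sequence. The gate ordering (input, forget, candidate, output), the weight shapes and the function names are assumptions for illustration. Training and the many published LSTM variants are not shown.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def lstm_step(x, h_prev, c_prev, W, U, b):
    """One step of a standard LSTM cell.

    W: (4H, D) input weights, U: (4H, H) recurrent weights, b: (4H,) biases,
    with rows stacked in the assumed order input, forget, candidate, output.
    """
    H = h_prev.shape[0]
    z = W @ x + U @ h_prev + b            # pre-activations of all four gates
    i = sigmoid(z[0:H])                   # input gate
    f = sigmoid(z[H:2 * H])               # forget gate
    g = np.tanh(z[2 * H:3 * H])           # candidate values for the cell state
    o = sigmoid(z[3 * H:4 * H])           # output gate
    c = f * c_prev + i * g                # updated cell state (the long-term memory)
    h = o * np.tanh(c)                    # updated hidden state
    return h, c

def lstm_sequence(xs, W, U, b, H):
    """Run the cell over a sequence; h and c are fed back at every step."""
    h, c = np.zeros(H), np.zeros(H)
    outputs = []
    for x in xs:
        h, c = lstm_step(x, h, c, W, U, b)
        outputs.append(h)
    return np.stack(outputs)
```

The returned array has one hidden state per time step. The recurrence through `h` and `c` is the "feedback" that distinguishes the cell from a feedforward layer.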


Zijun Gao and Trevor Hastie. LinCDE: Conditional density estimation via Lindsey's method. Lindsey's method allows for smooth density estimation by turning the density problem into a Poisson GLM.
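The following minimal NumPy sketch shows Lindsey's method for an unconditional density. It bins the data, treats the bin counts as Poisson responses, and fits a log-linear model in the bin centers. For brevity it uses a cubic polynomial basis instead of natural splines and fits the GLM by plain IRLS. It does not attempt the conditional (tree or boosting) extension. `lindsey_density` and `poisson_irls` are hypothetical names.

```python
import numpy as np

def poisson_irls(X, y, n_iter=100, tol=1e-10):
    """Fit the Poisson GLM log(mu) = X @ beta by iteratively reweighted least squares."""
    mu = y + 0.1                       # start from (slightly inflated) observed counts
    eta = np.log(mu)
    beta = np.zeros(X.shape[1])
    for _ in range(n_iter):
        z = eta + (y - mu) / mu        # working response for the log link
        w = mu                         # working weights for the log link
        beta_new = np.linalg.solve(X.T @ (X * w[:, None]), X.T @ (w * z))
        eta = X @ beta_new
        mu = np.exp(eta)
        converged = np.max(np.abs(beta_new - beta)) < tol
        beta = beta_new
        if converged:
            break
    return beta

def lindsey_density(samples, n_bins=40, degree=3):
    """Lindsey's method: histogram the data, then fit a Poisson GLM to the bin counts."""
    counts, edges = np.histogram(samples, bins=n_bins)
    centers = 0.5 * (edges[:-1] + edges[1:])
    width = edges[1] - edges[0]
    u = (centers - centers.mean()) / centers.std()     # standardize for a well-conditioned basis
    X = np.vander(u, degree + 1, increasing=True)      # columns 1, u, u^2, ..., u^degree
    fitted = np.exp(X @ poisson_irls(X, counts.astype(float)))
    density = fitted / (fitted.sum() * width)          # discretized normalization
    return centers, density
```

The normalization step is the discretization trick. Once the fitted counts are divided by their total times the bin width, the estimate integrates to one over the grid.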

In particular, we represent an exponential tilt function in a basis of natural splines, and use discretization to deal with the normalization. In this paper we extend the method to conditional density estimation via trees and then gradient boosting with trees. Swarnadip Ghosh, Trevor Hastie and Art Owen. Scalable logistic regression with crossed random effects.

We develop an approach for fitting crossed random-effect logistic regression models at massive scales, with applications in e-commerce. We adapt a procedure of Schall and backfitting algorithms to obtain O(n) algorithms, where n is the number of observations.
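The following pure-Python sketch shows the backfitting idea in its simplest Gaussian form, y = mu + a[row] + b[col] + noise. Each factor's effects are refit in turn as shrunken means of partial residuals. The shrinkage parameters `lam_a` and `lam_b` stand in for variance ratios and are assumed known. The paper's logistic version with Schall's procedure is not reproduced, and all names are hypothetical.

```python
from collections import defaultdict

def _update(obs, mu, effects, other, by_row, lam):
    """Refit one factor's effects as ridge-shrunken means of the partial residuals."""
    sums, counts = defaultdict(float), defaultdict(int)
    for i, j, y in obs:
        level, other_level = (i, j) if by_row else (j, i)
        sums[level] += y - mu - other[other_level]
        counts[level] += 1
    change = 0.0
    for level in sums:
        new = sums[level] / (counts[level] + lam)
        change = max(change, abs(new - effects[level]))
        effects[level] = new
    return change

def backfit_crossed(obs, lam_a=1.0, lam_b=1.0, n_sweeps=200, tol=1e-10):
    """obs is a list of (row, col, y) triples; every sweep is a fixed number of passes, i.e. O(n)."""
    n = len(obs)
    mu = sum(y for _, _, y in obs) / n
    a, b = defaultdict(float), defaultdict(float)
    for _ in range(n_sweeps):
        change = _update(obs, mu, a, b, True, lam_a)                 # row effects given column effects
        change = max(change, _update(obs, mu, b, a, False, lam_b))  # column effects given row effects
        new_mu = sum(y - a[i] - b[j] for i, j, y in obs) / n        # refit the intercept
        change = max(change, abs(new_mu - mu))
        mu = new_mu
        if change < tol:
            break
    return mu, dict(a), dict(b)
```

Each sweep touches every observation a constant number of times, so the cost per sweep grows linearly with n. The number of sweeps needed is what the cited analysis controls.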

DINA: Estimating Heterogeneous Treatment Effects in Exponential Family and Cox Models. We extend the R-learner framework to exponential families and the Cox model. Here we define the treatment effect to be the difference in natural parameter, or DINA.
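The following minimal sketch computes the DINA quantity itself. It assumes the conditional means under treatment and control at a covariate value have already been estimated by some outcome model. It only shows the contrast on the natural-parameter scale for two common families. The R-learner estimation procedure is not reproduced, and the function names are illustrative.

```python
import math

def logit(p):
    return math.log(p / (1.0 - p))

def dina_bernoulli(p_treated, p_control):
    """DINA for a binary outcome: difference in log-odds (the Bernoulli natural parameter)."""
    return logit(p_treated) - logit(p_control)

def dina_poisson(rate_treated, rate_control):
    """DINA for a count outcome: difference in log-rates (the Poisson natural parameter)."""
    return math.log(rate_treated) - math.log(rate_control)
```

Take `p_treated = 0.5` and `p_control = 0.2`. The risk difference is 0.3, while the DINA is log 4, about 1.386 (an odds ratio of 4). For the Cox model, the analogous scale would be the log relative hazard.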

Stephen Bates, Trevor Hastie and Rob Tibshirani. Cross-validation: what does it estimate and how well does it do it? Although CV is ubiquitous in data science, some of its properties are poorly understood. In this paper we argue that CV is better at estimating the expected prediction error than the prediction error of the particular model fit to the training set.
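The following minimal pure-Python sketch shows how a K-fold CV estimate of squared prediction error is formed. It also computes the usual naive standard error, which treats the n held-out losses as independent. The fold assignment (`i % k`), the toy "predict the training mean" model and all function names are assumptions for illustration. The paper's improved standard-error method is not reproduced.

```python
import statistics

def kfold_cv(xs, ys, fit, predict, k=5):
    """K-fold CV estimate of mean squared prediction error and its naive standard error.

    The naive SE treats the n held-out squared errors as independent, ignoring
    the correlation created because the folds share training data.
    """
    n = len(xs)
    losses = [0.0] * n
    for fold in range(k):
        train = [i for i in range(n) if i % k != fold]    # deterministic fold assignment
        model = fit([xs[i] for i in train], [ys[i] for i in train])
        for i in range(fold, n, k):                        # held-out indices of this fold
            losses[i] = (ys[i] - predict(model, xs[i])) ** 2
    cv_err = sum(losses) / n
    naive_se = statistics.stdev(losses) / n ** 0.5
    return cv_err, naive_se

def fit_mean(xs, ys):
    """Hypothetical toy model: ignore x and predict the training mean of y."""
    return sum(ys) / len(ys)

def predict_mean(model, x):
    return model
```

With `ys = 1, ..., 10` and `k = 5`, this toy setup gives a CV error of 9.375.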

We also provide a method for computing the standard error of the CV estimate. This standard error is larger than the commonly used naive estimate, which ignores the correlation across folds. Kenneth Tay, Balasubramanian Narasimhan and Trevor Hastie.

Elastic Net Regularization Paths for All Generalized Linear Models. This paper describes some of the substantial enhancements to the glmnet R package in version 4. All programmed GLM families are accommodated through a family argument.
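The following NumPy sketch gives a rough illustration of the kind of computation glmnet performs. It runs cyclic coordinate descent for the Gaussian elastic net at a single value of lambda. It assumes centered data and no intercept. It omits warm starts along a lambda path, active-set tricks and the non-Gaussian families the package supports, so it is not the package's code.

```python
import numpy as np

def soft_threshold(z, t):
    return np.sign(z) * max(abs(z) - t, 0.0)

def elastic_net_cd(X, y, lam, alpha=1.0, n_iter=1000, tol=1e-10):
    """Cyclic coordinate descent for the Gaussian elastic net (X, y assumed centered).

    Minimizes (1/2n)||y - X b||^2 + lam * (alpha*||b||_1 + (1 - alpha)/2 * ||b||_2^2).
    """
    n, p = X.shape
    beta = np.zeros(p)
    r = y.astype(float).copy()                # current residual y - X beta
    col_sq = (X ** 2).sum(axis=0) / n
    for _ in range(n_iter):
        max_delta = 0.0
        for j in range(p):
            old = beta[j]
            z = X[:, j] @ r / n + col_sq[j] * old          # correlation with the partial residual
            new = soft_threshold(z, lam * alpha) / (col_sq[j] + lam * (1 - alpha))
            if new != old:
                r -= X[:, j] * (new - old)                  # keep the residual in sync
                beta[j] = new
                max_delta = max(max_delta, abs(new - old))
        if max_delta < tol:
            break
    return beta
```

Setting `alpha=1` gives the lasso and `alpha=0` gives ridge. The soft-thresholding step is what produces exact zeros.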

Benjamin Haibe-Kains et al. Transparency and reproducibility in artificial intelligence. A note in Matters Arising, Nature, in which we criticize the lack of transparency in a high-profile paper on using AI for breast-cancer screening. Lukasz Kidzinski, Francis Hui, David Warton and Trevor Hastie. Generalized Matrix Factorization. We fit large-scale generalized linear latent variable models (GLLVM) by using efficient methodology adapted from matrix factorization.

Backfitting for large scale crossed random effects regressions. Regression models with crossed random effects can be very expensive to compute in large-scale applications, e.g. e-commerce with millions of customers and many thousands of products.

We adapt a backfitting algorithm to problems of this kind and achieve convergence in time provably linear in the number of observations. To appear, Annals of Statistics. Elena Tuzhilina, Trevor Hastie and Mark Segal. Principal curve approaches for inferring 3D chromatin architecture. We adapt metric scaling algorithms to approximate the 3D conformation of a chromosome based on contact maps.
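Classical (Torgerson) metric scaling is the textbook starting point for turning pairwise distances into coordinates. The following minimal NumPy sketch assumes a full matrix of distances is already available. Turning a contact map into distances (for example through an inverse power law) is a modelling choice that is not shown. This is also not the principal-curve method of the paper.

```python
import numpy as np

def classical_mds(D, dim=3):
    """Classical (Torgerson) metric scaling.

    D is an (n, n) matrix of pairwise distances; returns (n, dim) coordinates
    whose Euclidean distances approximate D.
    """
    n = D.shape[0]
    J = np.eye(n) - np.ones((n, n)) / n              # centering matrix
    B = -0.5 * J @ (D ** 2) @ J                      # double-centred squared distances = Gram matrix
    vals, vecs = np.linalg.eigh(B)                   # eigenvalues in ascending order
    top = np.argsort(vals)[::-1][:dim]               # indices of the `dim` largest eigenvalues
    scale = np.sqrt(np.clip(vals[top], 0.0, None))   # negative (non-Euclidean) parts set to 0
    return vecs[:, top] * scale
```

When the distances really come from points in 3D, the recovered coordinates reproduce them exactly, up to rotation, reflection and translation.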

Trevor Hastie. Ridge Regularization: an Essential Concept in Data Science. This paper was written by request to celebrate the 50th anniversary of the first ridge-regression paper by Hoerl and Kennard (Technometrics, 1970). In it I gather together some of my favorite aspects of ridge, and also touch on the "double-descent" phenomenon.
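The following minimal NumPy sketch shows the ridge estimator in its two most common forms, assuming centered data and no intercept. The first is the direct solve of (X^T X + lambda I) beta = X^T y. The second is the SVD form, which shows ridge shrinking the least-squares coefficient along each principal direction by the factor d^2 / (d^2 + lambda).

```python
import numpy as np

def ridge(X, y, lam):
    """Ridge estimate solving (X^T X + lam I) beta = X^T y (centered data, no intercept)."""
    p = X.shape[1]
    return np.linalg.solve(X.T @ X + lam * np.eye(p), X.T @ y)

def ridge_svd(X, y, lam):
    """Same estimate via X = U diag(d) V^T: the least-squares coefficient U^T y / d
    in each direction is multiplied by the shrinkage factor d^2 / (d^2 + lam)."""
    U, d, Vt = np.linalg.svd(X, full_matrices=False)
    return Vt.T @ (d / (d ** 2 + lam) * (U.T @ y))
```

Directions with small singular values d are shrunk the most. This is the sense in which ridge stabilizes an ill-conditioned least-squares fit.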

Technometrics. Kenneth Tay, Nima Aghaeepour, Trevor Hastie, Robert Tibshirani. Feature-weighted elastic net: using "features of features" for better prediction. We often have metadata that informs us about features to be used for supervised learning. For example, genomic features may belong to different pathways. Here we develop a method for exploiting this information to improve prediction.

Statistica Sinica. Junyang Qian, Yosuke Tanigawa, Ruilin Li, Robert Tibshirani, Manuel A. Rivas, Trevor Hastie. Large-Scale Sparse Regression for Multiple Responses with Applications to UK Biobank. We develop methodology to build large sparse polygenic risk score models using multiple phenotypes (multitask learning).

Our methodology builds on our earlier snpnet lasso models for single phenotypes, and includes missing-phenotype imputation and adjustment for confounders. Trevor Hastie, Andrea Montanari, Saharon Rosset and Ryan Tibshirani. Surprises in High-Dimensional Ridgeless Least Squares Interpolation.

Interpolating fitting algorithms have attracted growing attention in machine learning, mainly because state-of-the-art neural networks appear to be models of this type. In this paper, we study minimum L2-norm "ridgeless" interpolation in high-dimensional least squares regression. We consider both a linear model and a version of a neural network. We recover several phenomena that have been observed in large-scale neural networks and kernel machines, including the "double descent" behavior of the prediction risk, and the potential benefits of overparametrization.
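The following minimal NumPy sketch shows the "ridgeless" estimator, assuming a design with more columns than rows. The minimum-norm interpolator is the pseudoinverse solution. It is also the limit of ridge regression as lambda shrinks to zero. None of the paper's risk calculations are reproduced.

```python
import numpy as np

def min_norm_interpolator(X, y):
    """Minimum L2-norm least-squares solution via the pseudoinverse.
    When p > n and X has full row rank, it fits the training data exactly."""
    return np.linalg.pinv(X) @ y

def ridge_dual(X, y, lam):
    """Ridge in its n x n form X^T (X X^T + lam I)^{-1} y, convenient when p > n.
    As lam -> 0+ it converges to the minimum-norm interpolator."""
    n = X.shape[0]
    return X.T @ np.linalg.solve(X @ X.T + lam * np.eye(n), y)
```

Any other interpolating solution adds a component from the null space of X. That component is orthogonal to the row space, so it can only increase the L2 norm.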

Zijun Gao, Trevor Hastie and Rob Tibshirani. Assessment of heterogeneous treatment effect estimation accuracy via matching. We address the difficult problem of assessing the performance of an HTE estimator. Our approach has several novelties: a flexible matching metric based on random-forest proximity scores, an optimized matching algorithm, and a match-then-split cross-validation scheme.

Statistics in Medicine, April. Junyang Qian, Yosuke Tanigawa, Wenfei Du, Matthew Aguirre, Chris Chang, Robert Tibshirani, Manuel A. Rivas, Trevor Hastie. A Fast and Scalable Framework for Large-scale and Ultrahigh-dimensional Sparse Regression with Application to the UK Biobank. PLOS Genetics, October. We develop a scalable lasso algorithm for fitting polygenic risk scores at GWAS scale.
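A common way to scale lasso paths to very many variables is the sequential strong rule. It screens out most variables before fitting at the next lambda on the path. The following minimal NumPy sketch assumes the glmnet-style objective (1/2n)||y - Xb||^2 + lambda*||b||_1. The rule can occasionally discard a variable that belongs in the model, so it is paired with a KKT check. This is a generic illustration, not snpnet's batch-screening implementation.

```python
import numpy as np

def strong_rule_keep(X, resid_prev, lam_new, lam_prev):
    """Indices that survive the sequential strong rule at lam_new.

    Assumes the objective (1/2n)||y - X b||^2 + lam * ||b||_1 and that resid_prev
    is the residual y - X b at the previous path point lam_prev.
    """
    n = X.shape[0]
    score = np.abs(X.T @ resid_prev) / n
    return np.flatnonzero(score >= 2 * lam_new - lam_prev)

def kkt_violations(X, resid, lam, excluded):
    """Screened-out variables whose gradient exceeds lam; these must be added back and refit."""
    n = X.shape[0]
    score = np.abs(X[:, excluded].T @ resid) / n
    return [j for j, s in zip(excluded, score) if s > lam]
```

Only the surviving columns are passed to the lasso solver. Any KKT violators found afterwards are added back and the fit is repeated, so screening never changes the final answer.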

There is also a bioRxiv version. Our R package snpnet combines efficient batch-wise strong-rule screening with glmnet to fit lasso regularization paths on phenotypes in the UK Biobank data. We have an R package fcomplete, which includes three vignettes demonstrating how it can be used. Trevor Hastie, Rob Tibshirani and Ryan Tibshirani. Extended Comparisons of Best Subset Selection, Forward Stepwise Selection, and the Lasso. This paper is a follow-up to "Best Subset Selection via a Modern Optimization Lens" by Bertsimas, King, and Mazumder (AoS, 2016). We compare these methods using a broad set of simulations that cover typical statistical applications.

Our conclusions are that best-subset selection is mainly needed in very high signal-to-noise regimes, and that the relaxed lasso is the overall winner. Scott Powers, Junyang Qian, Kenneth Jung, Alejandro Schuler, Nigam Shah, Trevor Hastie and Robert Tibshirani. Some methods for heterogeneous treatment effect estimation in high dimensions. We develop some new methods for estimating personalized treatment effects from observational data: causal boosting and causal MARS.
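The relaxed lasso in the comparison above blends the lasso coefficients with a least-squares refit on the lasso's active set: gamma * beta_lasso + (1 - gamma) * beta_LS. The following minimal NumPy sketch assumes the lasso fit has already been computed elsewhere (for example with glmnet). In the check, the design is orthonormal, so the lasso solution is just soft-thresholding and can be written down by hand.

```python
import numpy as np

def relaxed_lasso(X, y, beta_lasso, gamma):
    """Blend a lasso fit with a least-squares refit on its active set.

    gamma = 1 returns the lasso; gamma = 0 returns the unpenalized refit.
    """
    active = np.flatnonzero(beta_lasso)
    beta_ls = np.zeros_like(beta_lasso, dtype=float)
    if active.size:
        coef, *_ = np.linalg.lstsq(X[:, active], y, rcond=None)
        beta_ls[active] = coef
    return gamma * beta_lasso + (1 - gamma) * beta_ls
```

Consider the orthonormal design with X^T X / n = I and X^T y / n = (3, 1, 0.5). The lasso at lambda = 0.8 is (2.2, 0.2, 0). The refit (gamma = 0) is (3, 1, 0), and gamma = 0.5 gives (2.6, 0.6, 0). The lasso selects the variables, and gamma controls how much of its shrinkage is kept.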

Statistics in Medicine, January. Scott Powers, Trevor Hastie and Rob Tibshirani. Nuclear penalized multinomial regression with an application to predicting at-bat outcomes in baseball. Here we use a convex formulation of the reduced-rank multinomial model, in a novel application using a large dataset of baseball statistics. In the special issue "Statistical Modelling for Sports Analytics", Statistical Modelling, vol.
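In a convex reduced-rank formulation, a nuclear-norm penalty on the coefficient matrix encourages low rank. Proximal-gradient methods handle this penalty through singular value soft-thresholding. The following minimal NumPy sketch shows that building block and one proximal-gradient step for the multinomial log-likelihood. It assumes no intercepts and a fixed step size, and it is not the authors' fitting algorithm.

```python
import numpy as np

def svt(B, t):
    """Proximal operator of t * ||B||_* (nuclear norm): soft-threshold the singular values."""
    U, d, Vt = np.linalg.svd(B, full_matrices=False)
    return (U * np.maximum(d - t, 0.0)) @ Vt

def softmax(Z):
    Z = Z - Z.max(axis=1, keepdims=True)       # subtract row max for numerical stability
    E = np.exp(Z)
    return E / E.sum(axis=1, keepdims=True)

def objective(B, X, Y, lam):
    """Average multinomial negative log-likelihood plus lam * nuclear norm of B."""
    P = softmax(X @ B)
    nll = -np.sum(Y * np.log(P)) / X.shape[0]
    return nll + lam * np.linalg.norm(B, ord="nuc")

def prox_grad_step(B, X, Y, lam, step):
    """One proximal-gradient step: gradient step on the likelihood, then SVT.

    X is (n, p) features, Y is (n, K) one-hot outcomes, B is (p, K) coefficients.
    """
    grad = X.T @ (softmax(X @ B) - Y) / X.shape[0]
    return svt(B - step * grad, step * lam)
```

Singular values below the threshold are set to zero, which is how the penalty lowers the rank of the fitted coefficient matrix.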

Qingyuan Zhao and Trevor Hastie. Causal Interpretations of Black-Box Models. We draw connections between Friedman's partial dependence plot and Pearl's back-door adjustment to explore the possibility of extracting causality statements after fitting complex models by machine learning algorithms. Saturating Splines and Feature Selection. We use a convex framework based on total variation (TV) penalty norms for nonparametric regression.
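Friedman's partial dependence function for a feature holds that feature fixed and averages the fitted model's predictions over the observed values of the other features. The following minimal pure-Python sketch uses a hypothetical `predict` function to stand in for the fitted black-box model. Whether the resulting curve can be read causally (through the back-door adjustment) depends on conditions that the code does not check.

```python
def partial_dependence(predict, X, feature, grid):
    """Friedman's partial dependence of `predict` on column `feature`.

    For each grid value v, set that column to v in every row of X, keep the other
    columns at their observed values, and average the predictions.
    """
    curve = []
    for v in grid:
        total = 0.0
        for row in X:
            x = list(row)
            x[feature] = v
            total += predict(x)
        curve.append(total / len(X))
    return curve
```

For the model 2*x0 + x1^2 on rows (0, 1), (1, 2), (2, 3), the partial dependence on x0 at 0 and 1 is 14/3 and 20/3. The other feature is averaged out rather than held at a single value.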

Saturation for degree-two splines requires that the solution extrapolate as a constant beyond the range of the data. This, along with an additive model formulation, leads to a convex path algorithm for variable selection and smoothing with generalized additive models. JMLR 18. Scott Powers, Trevor Hastie and Robert Tibshirani. Customized training with an application to mass spectrometric imaging of cancer tissue.

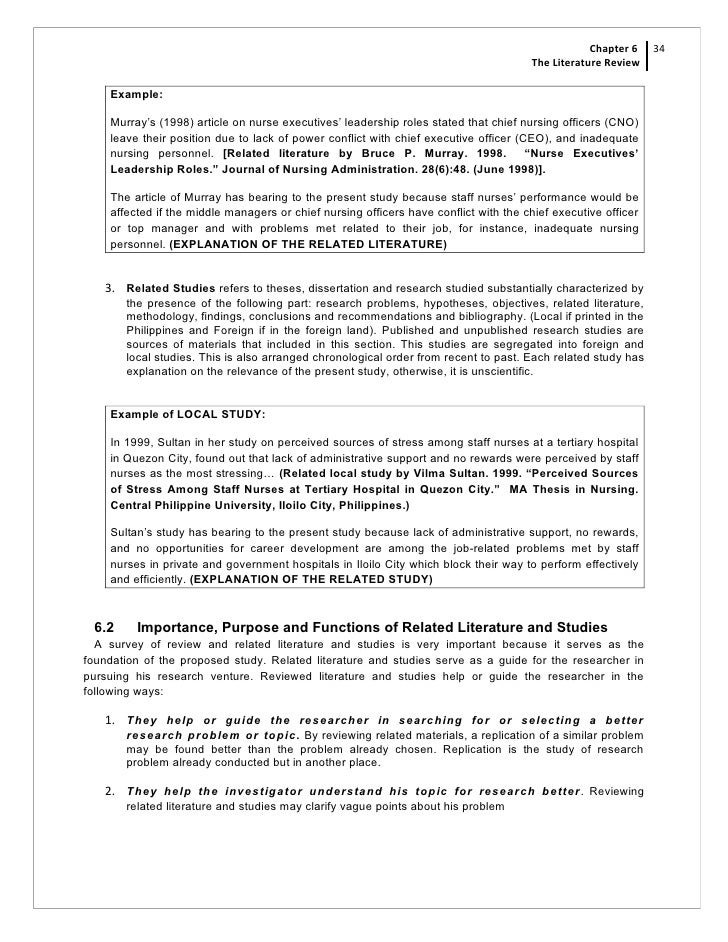

Annals of Applied Statistics 9(4). Rakesh Achanta and Trevor Hastie. Telugu OCR Framework using Deep Learning. We build an end-to-end OCR system for Telugu script that segments the text image, classifies the characters and extracts lines using a language model.

The classification module, which is the most challenging task of the three, is a deep convolutional neural network.
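The basic layers such a network stacks can be sketched in a few lines of NumPy. These are a "valid" 2-D convolution (implemented, as in most deep-learning libraries, as cross-correlation), a ReLU, and 2x2 max pooling. This is a generic illustration. The paper's actual architecture, layer sizes and training setup are not reproduced.

```python
import numpy as np

def conv2d_valid(image, kernel):
    """'Valid' 2-D cross-correlation of a single-channel image with one kernel."""
    H, W = image.shape
    kh, kw = kernel.shape
    out = np.empty((H - kh + 1, W - kw + 1))
    for i in range(out.shape[0]):
        for j in range(out.shape[1]):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

def relu(z):
    return np.maximum(z, 0.0)

def max_pool2(z):
    """2x2 max pooling with stride 2 (an odd trailing row/column is dropped)."""
    H, W = z.shape[0] // 2 * 2, z.shape[1] // 2 * 2
    z = z[:H, :W]
    return z.reshape(H // 2, 2, W // 2, 2).max(axis=(1, 3))
```

A real classifier learns many kernels per layer and ends with fully connected layers and a softmax over character classes. The sketch only shows the shape of one convolution, activation and pooling stage.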

Jingshu Wang, Qingyuan Zhao, Trevor Hastie and Art Owen. Confounder Adjustment in Multiple Hypothesis Testing. Accepted, Annals of Statistics. We present a unified framework for analysing different proposals for adjusting for confounders in multiple testing, e.g. in genomics. We also provide an R package cate on CRAN that implements these different approaches. The vignette shows some examples of how to use it. Alexandra Chouldechova and Trevor Hastie. Generalized Additive Model Selection. A method for selecting terms in an additive model, with sticky selection between null, linear and nonlinear terms, as well as the amount of nonlinearity.

The R package gamsel has been uploaded to CRAN. Trevor Hastie, Rahul Mazumder, Jason Lee and Reza Zadeh. Matrix Completion and Low-Rank SVD via Fast Alternating Least Squares. We develop a new method for matrix completion that improves upon our earlier softImpute algorithm, as well as the popular ALS algorithm. JMLR 16. We have also incorporated this method in our R package softImpute. Ya Le, Trevor Hastie.
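The following minimal NumPy sketch shows the alternating-ridge idea behind matrix completion with fast alternating least squares. It fills the missing entries with the current low-rank fit, then solves a ridge regression for each factor in turn. It approximately minimizes 0.5*||P_Omega(X - A B^T)||^2 + 0.5*lambda*(||A||^2 + ||B||^2) for a fixed rank. The rank, lambda, iteration count and random initialization are assumptions. This is a simplified illustration, not the softImpute package's algorithm.

```python
import numpy as np

def als_complete(X, mask, rank=2, lam=1e-3, n_iter=300, seed=0):
    """Matrix completion by alternating ridge regressions with imputation.

    mask is a boolean array, True where X is observed; entries of X where mask is
    False are ignored. Returns the low-rank fit A @ B.T.
    """
    rng = np.random.default_rng(seed)
    m, n = X.shape
    A = rng.normal(size=(m, rank))
    B = rng.normal(size=(n, rank))
    ridge = lam * np.eye(rank)
    for _ in range(n_iter):
        filled = np.where(mask, X, A @ B.T)                        # impute missing entries with current fit
        A = np.linalg.solve(B.T @ B + ridge, (filled @ B).T).T     # ridge regression for row factors
        filled = np.where(mask, X, A @ B.T)
        B = np.linalg.solve(A.T @ A + ridge, (filled.T @ A).T).T   # ridge regression for column factors
    return A @ B.T
```

Each update is a small rank-by-rank linear solve, which keeps the per-iteration cost low even when the matrix is large.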

Sparse Quadratic Discriminant Analysis and Community Bayes. We provide a framework for generalizing naive Bayes classification using sparse graphical models. Naive Bayes assumes that, conditional on the response, the variables are independent. We relax that, and assume their conditional dependence graph is shared and sparse. William Fithian, Jane Elith, Trevor Hastie, David A.
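For contrast with the sparse-graph relaxation, the following minimal pure-Python sketch implements Gaussian naive Bayes. Under the conditional-independence assumption, each class is summarized by a prior and one mean and variance per feature (a diagonal covariance). The small variance floor and the function names are assumptions. The paper's sparse QDA and community Bayes methods are not shown.

```python
import math
from collections import defaultdict

def fit_gaussian_nb(X, y, var_floor=1e-9):
    """Per-class prior plus per-feature mean and variance (the independence assumption)."""
    groups = defaultdict(list)
    for row, label in zip(X, y):
        groups[label].append(row)
    model = {}
    for label, rows in groups.items():
        n_c, p = len(rows), len(rows[0])
        means = [sum(r[j] for r in rows) / n_c for j in range(p)]
        variances = [sum((r[j] - means[j]) ** 2 for r in rows) / n_c + var_floor for j in range(p)]
        model[label] = (n_c / len(X), means, variances)
    return model

def predict_gaussian_nb(model, x):
    """Pick the class maximizing log prior plus the sum of per-feature Gaussian log-densities."""
    def log_posterior(label):
        prior, means, variances = model[label]
        return math.log(prior) + sum(
            -0.5 * math.log(2 * math.pi * v) - (xj - m) ** 2 / (2 * v)
            for xj, m, v in zip(x, means, variances))
    return max(model, key=log_posterior)
```

Because the log-density is a plain sum over features, no between-feature covariance is ever estimated. This is the assumption that the shared sparse dependence graph relaxes.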


A brief summary of the face recognition vendor test (FRVT), a large-scale evaluation of automatic face recognition technology, and its conclusions are also given. Using sparse representations for missing data imputation in noise robust speech recognition (European Signal Processing Conf. (EUSIPCO), Lausanne, Switzerland, August). J. F. Gemmeke and B. Cranen, Noise robust digit recognition using sparse representations.
